Department 1: Algorithms and Complexity

Headed by Prof. Danupon Na Nongkai, PhD.

The department investigates a broad range of theoretical and practical aspects of modern algorithmics. We design new algorithms and algorithmic techniques, analyze their efficiency and the quality of their solutions, develop provably efficient and correct software, and package our programs in software libraries. The strength of our approach lies in the fact that we consider these aspects in unity and not in isolation.

Research Areas

- Weitere Informationen

Algorithmic Game Theory

mehrIn the problems we consider in this group, we usually try to optimize some goal function while dealing with selfish agents that may have separate and conflicting goals, and that may lie to us in order to improve their own goal function. In algorithmic mechanism design, we ensure that it is in the best interest of the agents to tell us the truth. We also examine the price of anarchy and price of stability for various problems.

- Weitere Informationen

Clustering

mehrClustering refers to the partitioning of a set of objects into groups (clusters) of similar objects. Due to its wide-spread applicability, it is among the most fundamental tasks in data analysis and unsupervised learning. Our goal is to design algorithms with provable guarantees, for example, with respect to running time, space consumption, or quality of the solution. Complementing this, we are also studying the limits of such algorithms.

- Weitere Informationen

Distributed Computing

mehrBroadly speaking, distributed computing concerns systems that consist of multiple agents that act based on local information. The main challenge is typically how agents gain access to sufficient information to collaboratively solve a task quickly, despite limits on communication, faults, or inaccurate data.

- Weitere Informationen

Fine-Grained Complexity and Algorithm Design

mehrFine-grained Complexity Theory is the design of reductions that prove running time lower bounds assuming a plausible complexity-theoretic conjecture such as the Strong Exponential Time Hypothesis. In this area the design of efficient algorithms goes hand in hand with proving fine-grained lower bounds: our goal is to prove matching upper and lower bounds, thus establishing best-possible algorithms (with the correct constants in the exponent).

- Weitere Informationen

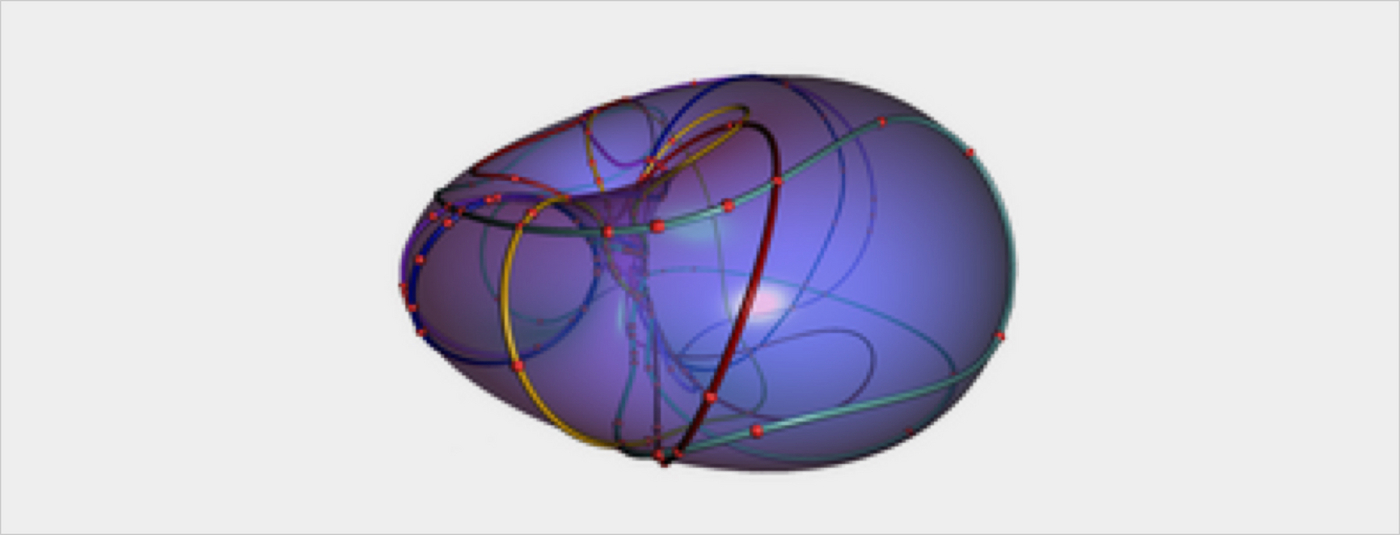

Graph Algorithms

mehrOur long-term vision is to develop techniques for designing efficient graph algorithms and use them to understand graph data. We currently focus on algorithms that work across many models of computation, such as dynamic, distributed, streaming, parallel, and quantum algorithms. We aim to achieve two goals simultaneously: (i) solutions to notorious long-standing open problems, and (ii) efficient algorithms that can fully exploit both the features of modern computing devices and the characteristics of contemporary data.

- Weitere Informationen

Learning-Augmented Algorithms

mehrLearning-augmented algorithms (also known as algorithms with predictions) attempt to go beyond worst-case bounds of classic algorithms by accessing an oracle that offers possibly imperfect predictions. The oracle could be, e.g., a trained machine-learning model, which tries to predict a partial solution or a yet unknown portion of the input. The challenge is to use the oracle in a robust way, i.e., to retain the best classic worst-case guarantees while achieving optimal performance for accurate predictions.

- Weitere Informationen

Optimization

mehrMany real world applications are naturally formulated as combinatorial optimization problems, i.e. problems of finding the best solution(s) out of a finite set. Various methods have been developed to tackle such problems: integer programming, fixed-parameter tractable and exact algorithms, approximation algorithms and combinatorial algorithms, among others. D1 works on applying these methods to various problems from different areas, ranging from bioinformatics to geometry, to scheduling, and several others.

- Weitere Informationen

Parameterized and Counting Algorithms and Complexity

mehrParameterized complexity analyzes how different parameters of the input influence the complexity of hard algorithmic problems. The general goal is to show with fixed-parameter tractability results that the combinatorial explosion can be confined to certain well-defined parameters, or to understand why such algorithms are not possible. An interesting class of hard problems that we consider are counting problems (where the goal is to count all solutions), as they may allow for interesting phenomena that are not observed in the corresponding decision problems.

- Weitere Informationen

String Algorithms and Data Compression

mehrStrings (texts, sequences) appear everywhere in our daily lives, and they constitute some of the biggest datasets generated by humanity (such as the petabytes of genomic data). We develop cutting-edge algorithms for processing huge strings of all kinds, focusing on classical problems such as measuring the similarity of two strings or finding all fragments of a long text that closely resemble a given pattern. We design our algorithms for multiple modern models of computation capable of handling big data. In particular, we develop compression schemes efficiently supporting basic computational tasks on the repetitive sequences.

Overview

- People

-

Research

mehr

What we work on, who works in which area, and the most recent Biennial Report.

-

Offers

mehr

Positions, Long Term Visits, Postdoc Positions, Ph.D. Applications, Internships and other Offers.

-

Teaching

mehr

Lectures, Seminars, Bachelor and Master Theses.

- Seminars

-

Activities

mehr

Visitors and newcomers to the department.

-

Publications

mehr

PhD Theses, Diploma Theses, Publications of Group Members.

-

ADFOCS

mehr

Advanced Course on the Foundations of Computer Science