Human Activity Recognition

Discovery of Everyday Human Activities From Long-term Visual Behaviour Using Topic Models

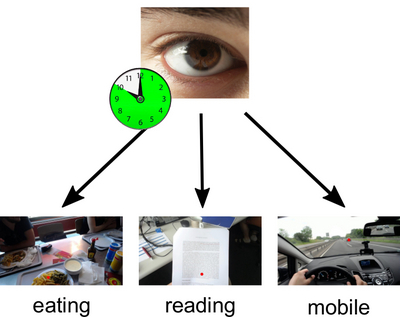

Human visual behaviour has been shown to have significant potential for activity recognition and computational behaviour analysis, but previous work focused on supervised methods and the recognition of predefined activity classes based on short-term eye movement recordings. We propose a fully unsupervised method to discover users' everyday activities from their long-term visual behaviour. Our method combines a bag-of-words representation of visual behaviour, encoding saccades, fixations, and blinks, with a latent Dirichlet allocation (LDA) topic model. We further propose and evaluate different methods to encode saccades for use in the topic model. We evaluate our method on a novel long-term gaze dataset that contains full-day recordings of the natural visual behaviour of 10 participants (more than 80 hours in total). We also provide annotations for eight sample activity classes: outdoor, social interaction, concentrated work, mobile, reading, computer work, watching media, and eating. In addition, we include periods with no specific activity. We show that our method can model and discover these activities with performance competitive with previously published supervised methods.
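To make the bag-of-words idea more concrete, the following is a minimal sketch of how eye movement events in one time window might be turned into word counts. It is not the encoding used in the work described above: the 8-way direction alphabet, the `encode_saccade` and `bag_of_words` helpers, the `FIX` and `BLINK` symbols, the n-gram length, and the toy data are all assumptions made for illustration. The sketch also assumes a coordinate system in which positive `dy` points upwards.

```python
import math
from collections import Counter

# Hypothetical 8-way direction alphabet (an assumption, not from the source).
DIRECTIONS = ["R", "UR", "U", "UL", "L", "DL", "D", "DR"]


def encode_saccade(dx, dy):
    """Map a saccade displacement (dx, dy) to one of 8 direction symbols."""
    # Angle in degrees, normalised to [0, 360); positive dy is assumed "up".
    angle = math.degrees(math.atan2(dy, dx)) % 360.0
    # Shift by half a bin so each 45-degree bin is centred on its direction.
    index = int((angle + 22.5) // 45) % 8
    return DIRECTIONS[index]


def bag_of_words(events, n=2):
    """Count n-grams ("words") of event symbols within one time window."""
    ngrams = ("-".join(events[i:i + n]) for i in range(len(events) - n + 1))
    return Counter(ngrams)


# Toy window: four saccades given as (dx, dy), followed by a fixation and a
# blink that are assumed to have been detected and labelled beforehand.
saccades = [(1.0, 0.0), (0.0, 1.0), (-1.0, 0.0), (1.0, 0.0)]
window = [encode_saccade(dx, dy) for dx, dy in saccades] + ["FIX", "BLINK"]
counts = bag_of_words(window, n=2)
```

In a pipeline of this kind, each time window would play the role of a "document", and the per-window count vectors would be passed to an LDA implementation so that the inferred topics can be compared against the annotated activities.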

MPII Cooking Composite Activities

This dataset provides a set of 41 composite cooking activities to be detected in high-resolution recordings.

MPII Cooking Activities Dataset

This dataset provides a set of 65 cooking activities to be detected in high-resolution recordings.

Activity Spotting & Composite Activities

This research area investigates several aspects of activity spotting, that is, detecting the activities that occurred within a large continuous data stream. While activity spotting focuses on short atomic activities, our research group also investigates complex activities. Typically, such activities are composed of several layers of subordinate activities.