Sequential Bayesian Model Update under Structured Scene Prior

Semantic road labeling is a key component of systems that aim at assisted or even autonomous driving. Considering that such systems continuously operate in the real-world, unforeseen conditions not represented in any conceivable training procedure are likely to occur on a regular basis. In order to equip systems with the ability to cope with such situations, we would like to enable adaptation to such new situations and conditions at runtime.Existing adaptive methods for image labeling either require labeled data from the new condition or even operate globally on a complete test set. None of this is a desirable mode of operation for a system as described above where new images arrive sequentially and conditions may vary.We study the effect of changing test conditions on scene labeling methods based on a new diverse street scene dataset. We propose a novel approach that can operate in such conditions and is based on a sequential Bayesian model update in order to robustly integrate the arriving images into the adapting procedure.

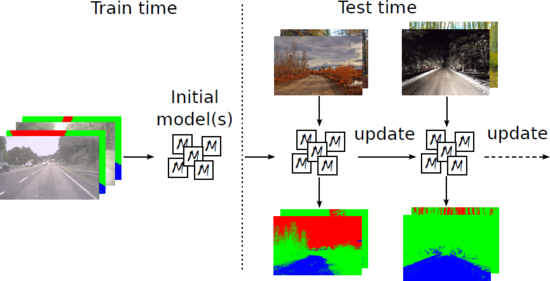

Method Overview

In order to robustly integrate new information at test time we propose a new Sequential Bayesian Model Update, which maintains a set of models via Particle Filter under the assumption of stationary label distribution.

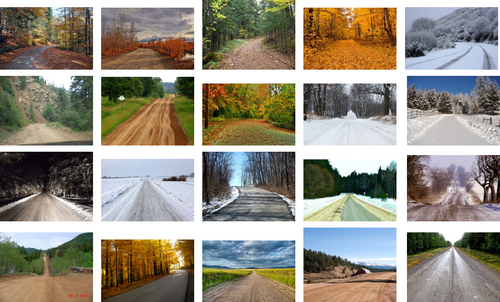

New Diverse Road Scenes Dataset

We have collected a new road scenes dataset which exhibits a large variety of visual conditions. We searched over the Internet, and particularly considered Flickr, and this resulted in a collection of 220 images, about half of which represent roads in autumn conditions and another half - roads in winter conditions.

We performed pixel-wise hand labeling of the gathered images into three classes: road (blue), sky (red), and background (green).

You can download the dataset here. It contains both train and test set used in the paper.

Code

The code for our method is written in pure C++11 (we used g++ 4.7.2) and doesn't have any compulsory dependencies (except for libpng, but it should be in your system by default). We tested it only on Linux, but it has cmake build system, so it should be possible to generate project and compile it on other platforms.

By default, our code uses OpenMP, but you can switch it off. In our experiments we also used the code of Fully Connected CRFs project. It is strongly recommended that you also download and compile their code.

Compiling and usage instructions can be found in the archive. In our experiments we randomly permuted test set, the archive contains these permuted indices. You can download the code here.

In our experiments we used features from this project. The authors kindly agreed to let us distribute the code for feature transform. You can download the package here. It depends on OpenCV. Compiling instructions can be found inside the archive.