Non-rigid Tracking from Depth and RGB Video

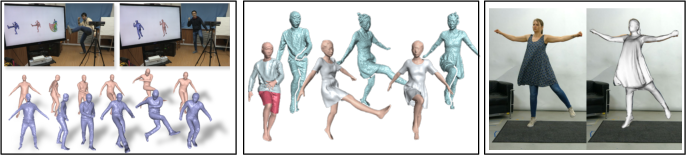

Non-rigid tracking. Left: DoubleFusion [1] combines free-form online reconstruction and tracking with model-based fitting to robustly reconstruct human motion and non-rigid geometry in real time. Middle: SimulCap [2] tracks the human body and each garment as separate surfaces and integrates physics-based simulation. Right: LiveCap [4] recovers non-rigid clothing deformations from a single RGB camera.

NOTE: For more information and the full list of publications, visit the Real Virtual Humans site: https://virtualhumans.mpi-inf.mpg.de

While human motion is mostly articulated, clothing dynamics are non-rigid and require special treatment. Although non-rigid reconstruction and tracking have been demonstrated with free-form volumetric methods such as DynamicFusion, these methods fail to track humans and clothing robustly. Model-based methods, which fit a statistical body model to depth data, are more robust but can only capture articulated motion.
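To make the articulation limitation concrete, here is a minimal sketch of linear blend skinning (LBS), the articulated skinning step used by statistical body models such as SMPL. It assumes the per-vertex skinning weights and the per-bone rigid transforms (relative to the rest pose) are already given; the names `linear_blend_skinning`, `apply_rigid` and `rot_z` are illustrative and not taken from any of the cited systems. Because every posed vertex is a weighted blend of a few rigid bone transforms, the model has no degrees of freedom for free-form effects such as garment wrinkles.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
Rigid = Tuple[Mat3, Vec3]  # (rotation R, translation t) of one bone, relative to rest pose

IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


def rot_z(angle: float) -> Mat3:
    """Rotation matrix for a rotation of `angle` radians about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))


def apply_rigid(transform: Rigid, v: Vec3) -> Vec3:
    """Map a rest-pose point v to R v + t."""
    R, t = transform
    return tuple(sum(R[i][j] * v[j] for j in range(3)) + t[i] for i in range(3))


def linear_blend_skinning(
    vertices: Sequence[Vec3],
    weights: Sequence[Sequence[float]],
    transforms: Sequence[Rigid],
) -> List[Vec3]:
    """Pose each vertex as sum_k w_k (R_k v + t_k).

    Assumes each weight row has one entry per bone and sums to 1.
    """
    posed = []
    for v, w in zip(vertices, weights):
        acc = [0.0, 0.0, 0.0]
        for w_k, transform in zip(w, transforms):
            p = apply_rigid(transform, v)
            for i in range(3):
                acc[i] += w_k * p[i]
        posed.append((acc[0], acc[1], acc[2]))
    return posed


# Usage: a vertex shared equally by a static bone and a bone rotated 90 degrees about z.
bones = [(IDENTITY, (0.0, 0.0, 0.0)), (rot_z(math.pi / 2), (0.0, 0.0, 0.0))]
print(linear_blend_skinning([(1.0, 0.0, 0.0)], [(0.5, 0.5)], bones))
```

The blended vertex lands near (0.5, 0.5, 0): whatever the pose, its position is fully determined by the bone transforms.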

In DoubleFusion [1], we combine free-form volumetric dynamic reconstruction with model-based fitting. DoubleFusion is the first real-time system that simultaneously reconstructs detailed geometry, non-rigid motion and the body shape under clothing from a single depth camera. A pre-defined node graph on the body surface parameterizes the non-rigid deformations near the body, while a free-form, dynamically changing node graph parameterizes the outer surface layer far from the body, which allows more general reconstruction. In addition, the inner body shape is optimized online and constrained to lie inside the outer surface layer. Overall, the method produces surface reconstructions that become progressively less noisy, more detailed and more complete, tracks fast motion, and recovers a plausible inner body shape, all in real time. A real-time demo was shown at CVPR’18.
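The node-graph parameterization can be illustrated with the embedded-deformation warp used by DynamicFusion-style systems. The sketch below is a simplified illustration under stated assumptions, not DoubleFusion's implementation: it blends node motions linearly with normalized Gaussian weights (DynamicFusion itself blends node transforms with dual quaternions), and it treats body-attached and free-form nodes identically once they exist, whereas in DoubleFusion the two layers differ in how their nodes are created and updated. `inside_penalty` is a hypothetical energy term showing one way to push the inner body inside the outer layer, given a signed distance function that is negative inside that layer.

```python
import math
from dataclasses import dataclass
from typing import Callable, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]
IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))


@dataclass
class Node:
    """One deformation node: canonical position g plus a local rigid motion (R, t)."""
    position: Vec3
    rotation: Mat3 = IDENTITY
    translation: Vec3 = (0.0, 0.0, 0.0)


def _dist2(a: Vec3, b: Vec3) -> float:
    return sum((a[i] - b[i]) ** 2 for i in range(3))


def warp_point(x: Vec3, nodes: Sequence[Node], sigma: float, k: int = 4) -> Vec3:
    """Warp a canonical point by linearly blending the motions of its k nearest nodes.

    Each node maps x to R (x - g) + g + t; blend weights are Gaussian in the
    distance to the node and normalized to sum to 1.
    """
    nearest = sorted(nodes, key=lambda n: _dist2(x, n.position))[:k]
    weights = [math.exp(-_dist2(x, n.position) / (2.0 * sigma ** 2)) for n in nearest]
    total = sum(weights)
    if total == 0.0:  # no node within reach: leave the point in place
        return x
    out = [0.0, 0.0, 0.0]
    for w, n in zip(weights, nearest):
        local = [x[j] - n.position[j] for j in range(3)]
        for i in range(3):
            rotated = sum(n.rotation[i][j] * local[j] for j in range(3))
            out[i] += (w / total) * (rotated + n.position[i] + n.translation[i])
    return (out[0], out[1], out[2])


def inside_penalty(body_vertices: Sequence[Vec3],
                   signed_distance: Callable[[Vec3], float]) -> float:
    """Sum of squared violations for body vertices that poke through the outer surface.

    `signed_distance` is assumed negative inside the outer surface and positive outside.
    """
    return sum(max(0.0, signed_distance(v)) ** 2 for v in body_vertices)


def outer_sphere(v: Vec3) -> float:
    """Signed distance to a unit sphere, a hypothetical stand-in for the outer layer."""
    return math.sqrt(sum(c * c for c in v)) - 1.0


# Usage: body-attached and free-form nodes are simply concatenated for warping.
body_nodes = [Node((0.0, 0.0, 0.0), translation=(0.1, 0.0, 0.0))]
free_nodes = [Node((1.0, 0.0, 0.0), translation=(0.1, 0.0, 0.0))]
print(warp_point((0.5, 0.0, 0.0), body_nodes + free_nodes, sigma=0.5))
print(inside_penalty([(0.0, 0.0, 0.0), (2.0, 0.0, 0.0)], outer_sphere))
```

Since both nodes share the same translation and no rotation, the warped point is simply shifted by that translation; only the vertex outside the unit sphere contributes to the penalty.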

Although DoubleFusion can track human motion and clothing jointly, it has two limitations. First, it uses a single surface to represent both the visible skin and the clothing. Second, it can only track non-rigid deformations that are visible in the depth image. In SimulCap [2], we draw inspiration from our previous work [9] and model the garments and the body as separate surfaces. In addition, we track clothing motion by means of physics-based simulation. Combining physics-based simulation with pure capture methods makes it possible to incorporate physical constraints and to recover the motion of parts that are not visible (e.g. the back of the body when the person faces the camera).
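SimulCap's simulator is not described in this overview, so as a generic illustration of what a physics-based cloth update involves, here is a minimal mass-spring sketch: Verlet integration followed by iterative distance-constraint projection, in the spirit of position-based dynamics. The function name `cloth_step`, the particle layout, the time step and the pinned set are illustrative assumptions; a real garment simulator would also handle collisions with the body, bending and damping.

```python
import math
from typing import FrozenSet, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Spring = Tuple[int, int, float]  # (particle i, particle j, rest length)


def cloth_step(
    positions: Sequence[Vec3],
    prev_positions: Sequence[Vec3],
    springs: Sequence[Spring],
    dt: float,
    gravity: Vec3 = (0.0, -9.81, 0.0),
    iterations: int = 10,
    pinned: FrozenSet[int] = frozenset(),
) -> Tuple[List[Vec3], List[Vec3]]:
    """Advance a mass-spring cloth by one step; returns (new, previous) positions."""
    # 1) Verlet integration: x_new = 2 x - x_prev + g dt^2 (unit masses, no damping).
    new_pos = []
    for idx, (p, q) in enumerate(zip(positions, prev_positions)):
        if idx in pinned:
            new_pos.append(list(p))
        else:
            new_pos.append([2.0 * p[k] - q[k] + gravity[k] * dt * dt for k in range(3)])

    # 2) Project spring constraints so each spring returns toward its rest length.
    for _ in range(iterations):
        for i, j, rest in springs:
            d = [new_pos[j][k] - new_pos[i][k] for k in range(3)]
            length = math.sqrt(sum(c * c for c in d))
            wi = 0.0 if i in pinned else 1.0  # inverse masses; pinned particles never move
            wj = 0.0 if j in pinned else 1.0
            if length == 0.0 or wi + wj == 0.0:
                continue
            scale = (length - rest) / (length * (wi + wj))
            for k in range(3):
                new_pos[i][k] += wi * scale * d[k]
                new_pos[j][k] -= wj * scale * d[k]

    return [tuple(p) for p in new_pos], list(positions)


# Usage: two particles, particle 0 pinned at the origin, rest length 1.
pos = [(0.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
pos, prev = cloth_step(pos, pos, [(0, 1, 1.0)], dt=0.01, pinned=frozenset({0}))
print(pos)  # particle 1 falls under gravity, then is pulled back to distance 1
```

Splitting the update into an unconstrained integration step and a constraint projection keeps the sketch stable for stiff springs, which is one common reason this scheme is popular for interactive cloth.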

While depth cameras make the problem easier, they are less ubiquitous than RGB cameras. Hence, we have also explored methods to recover non-rigid motion from RGB video alone, and have shown real-time non-rigid tracking of general objects [3] and of humans in clothing [4].

References

[1] DoubleFusion: Real-time Capture of Human Performances with Inner Body Shapes from a Single Depth Sensor

T. Yu, Z. Zheng, K. Guo, J. Zhao, Q. Dai, H. Li, G. Pons-Moll and Y. Liu

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2018), 2018

[2] SimulCap: Single-View Human Performance Capture with Cloth Simulation

T. Yu, Z. Zheng, Y. Zhong, J. Zhao, Q. Dai, G. Pons-Moll and Y. Liu

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR 2019), 2019

[3] NRST: Non-rigid Surface Tracking from Monocular Video

M. Habermann, W. Xu, H. Rhodin, M. Zollhöfer, G. Pons-Moll and C. Theobalt

German Conference on Pattern Recognition (GCPR 2018), 2018

[4] LiveCap: Real-time Human Performance Capture from Monocular Video

M. Habermann, W. Xu, M. Zollhöfer, G. Pons-Moll and C. Theobalt

ACM Transactions on Graphics, 2019