Pictorial Structures Revisited: People Detection and Articulated Pose Estimation

M. Andriluka, S. Roth, B. Schiele. Pictorial Structures Revisited: People Detection and Articulated Pose Estimation.

IEEE Conference on Computer Vision and Pattern Recognition (CVPR'09), Miami, USA, June 2009.

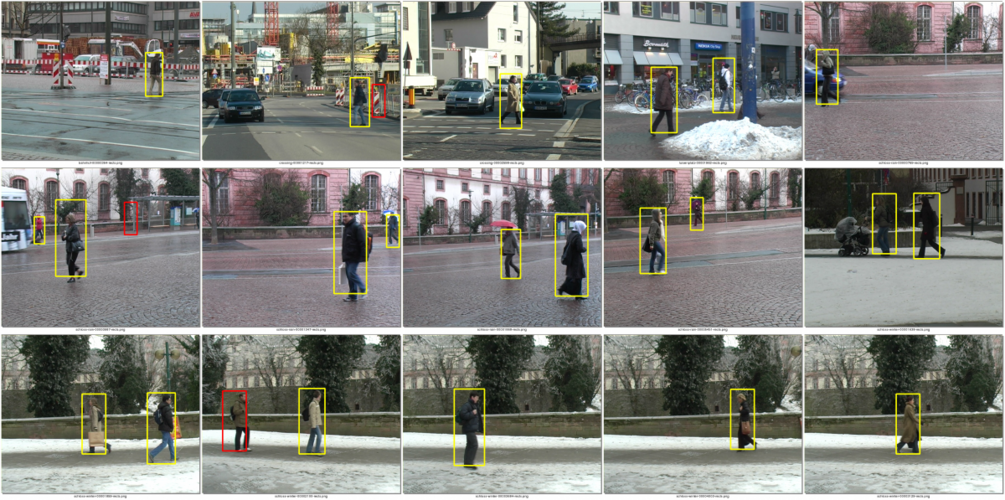


Abstract:

Non-rigid object detection and articulated pose estimation are two related and challenging problems in computer vision. Numerous models have been proposed over the years and often address different special cases, such as pedestrian detection or upper body pose estimation in TV footage. This paper shows that such specialization may not be necessary, and proposes a generic approach based on the pictorial structures framework. We show that the right selection of components for both appearance and spatial modeling is crucial for general applicability and overall performance of the model. The appearance of body parts is modeled using densely sampled shape context descriptors and discriminatively trained AdaBoost classifiers. Furthermore, we interpret the normalized margin of each classifier as likelihood in a generative model. Non-Gaussian relationships between parts are represented as Gaussians in the coordinate system of the joint between parts. The marginal posterior of each part is inferred using belief propagation. We demonstrate that such a model is equally suitable for both detection and pose estimation tasks, outperforming the state of the art on three recently proposed datasets.
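The sketch below is a toy illustration of three ingredients named in the abstract. It is not the authors' implementation. It shows a normalized AdaBoost margin read as a part likelihood, a Gaussian pairwise term placed on the disagreement between two parts' predictions of their shared joint, and sum-product belief propagation that yields per-part marginal posteriors. It rests on several simplifying assumptions. The parts form a chain and live on a discrete 1-D grid of locations. The real model covers 2-D position, orientation and scale, and also rotates into each joint's coordinate frame, which is what makes the relationships non-Gaussian in image space. The function names `margin_likelihood`, `pairwise` and `chain_marginals`, the floor constant `EPS0`, and all numbers are hypothetical.

```python
import numpy as np

# Toy sketch (NOT the authors' code): parts form a chain and each part sits at
# one of N discrete 1-D locations. All names and constants are hypothetical.
EPS0 = 1e-4  # assumed floor so that no location gets zero likelihood


def margin_likelihood(alphas, weak_votes):
    """Normalized AdaBoost margin, floored at EPS0, read as a part likelihood.

    alphas: (T,) positive weak-learner weights.
    weak_votes: (T, N) weak-learner outputs in {-1, +1} at N candidate locations.
    Returns an (N,) array with values in [EPS0, 1].
    """
    margin = alphas @ weak_votes / alphas.sum()  # normalized margin in [-1, 1]
    return np.maximum(margin, EPS0)


def pairwise(positions, parent_offset, child_offset, sigma):
    """Gaussian compatibility psi[i, j] of parent at positions[i], child at positions[j].

    Each part predicts where the shared joint lies (location + offset); the
    Gaussian is placed on the disagreement between the two predictions, i.e.
    in the joint's frame (1-D here, so there is no rotation or scaling).
    """
    joint_from_parent = positions[:, None] + parent_offset  # shape (N, 1)
    joint_from_child = positions[None, :] + child_offset    # shape (1, N)
    d = joint_from_parent - joint_from_child                # shape (N, N)
    return np.exp(-0.5 * (d / sigma) ** 2)


def chain_marginals(unaries, pairs):
    """Sum-product belief propagation on a chain of K parts (exact on trees).

    unaries: list of K arrays of shape (N,).
    pairs: list of K-1 arrays of shape (N, N); pairs[k][i, j] couples
    part k at location i with part k+1 at location j.
    Returns K normalized marginal distributions of shape (N,).
    """
    K = len(unaries)
    fwd = [np.ones_like(u) for u in unaries]  # messages arriving from the left
    bwd = [np.ones_like(u) for u in unaries]  # messages arriving from the right
    for k in range(1, K):
        m = (unaries[k - 1] * fwd[k - 1]) @ pairs[k - 1]  # sum over part k-1
        fwd[k] = m / m.sum()  # rescale for numerical stability
    for k in range(K - 2, -1, -1):
        m = pairs[k] @ (unaries[k + 1] * bwd[k + 1])  # sum over part k+1
        bwd[k] = m / m.sum()
    beliefs = [u * f * b for u, f, b in zip(unaries, fwd, bwd)]
    return [b / b.sum() for b in beliefs]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    positions = np.arange(10, dtype=float)
    # Random weak-learner weights and votes stand in for trained classifiers.
    unaries = [
        margin_likelihood(rng.uniform(0.1, 1.0, 5), rng.choice([-1.0, 1.0], (5, 10)))
        for _ in range(3)
    ]
    psi = pairwise(positions, parent_offset=1.0, child_offset=-1.0, sigma=1.0)
    marginals = chain_marginals(unaries, [psi, psi])
    print([int(np.argmax(m)) for m in marginals])  # most probable location per part
```

Because a chain is a tree, these marginals equal a brute-force sum of the joint over all N^K configurations. Belief propagation obtains them in O(K·N²) instead.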

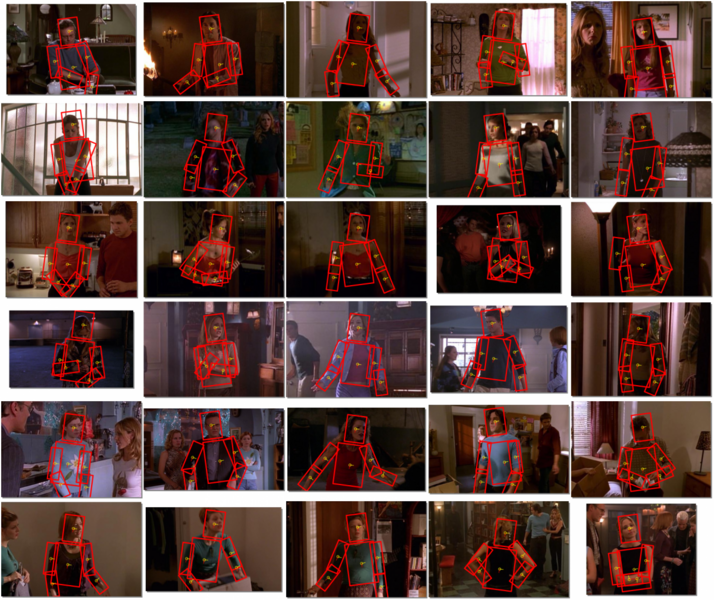

Upper Body Pose Estimation ("Buffy" Dataset)

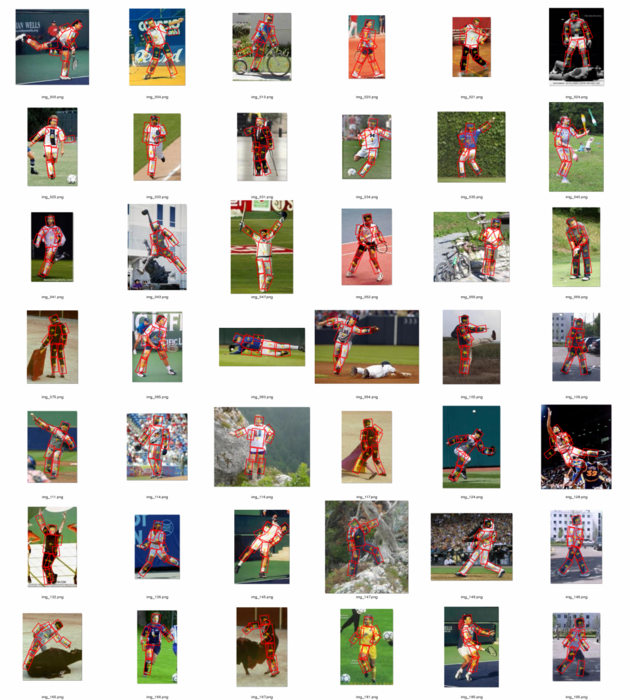

Full Body Pose Estimation ("People" Dataset)

Source code:

The source code and experiments package are now publicly available. The experiments package contains the pre-trained models, configuration files and the "TUD-UprightPeople" dataset used in the experiments described in the paper. Please see the README.txt file that accompanies the source code for more details.

Contact:

For further information, please contact the authors: Micha Andriluka, Stefan Roth and Bernt Schiele.