Object Disambiguation for Augmented Reality Applications

Wei-Chen Chiu¹, Gregory S. Johnson², Daniel Mcculley², Oliver Grau² and Mario Fritz¹

¹ Max Planck Institute for Informatics, Saarbrücken, Germany

² Intel Corporation

Abstract

The broad deployment of wearable camera technology in the foreseeable future offers new opportunities for augmented reality applications ranging from consumer (e.g. games) to professional (e.g. assistance). In order to span this wide scope of use cases, a markerless object detection and disambiguation technology is needed that is robust and can be easily adapted to new scenarios. Further, standardized benchmarking data and performance metrics are needed to establish the relative success rates of different detection and disambiguation methods designed for augmented reality applications.

Here, we propose a novel object recognition system that fuses state-of-the-art 2D detection with 3D context. We focus on assisting a maintenance worker by providing an augmented reality overlay that identifies and disambiguates potentially repetitive machine parts. In addition, we provide an annotated dataset that can be used to quantify the success rate of a variety of 2D and 3D systems for object detection and disambiguation. Finally, we evaluate several performance metrics for object disambiguation relative to the baseline success rate of a human.

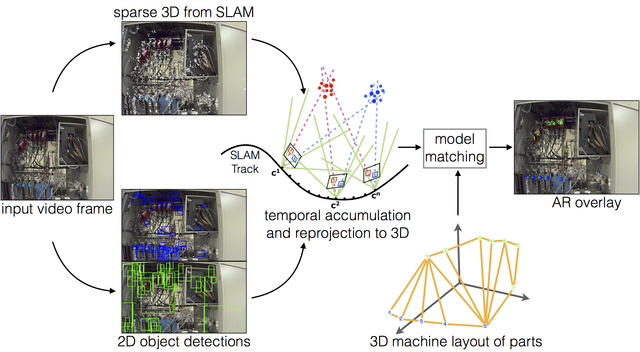

Overview of our system for object disambiguation.

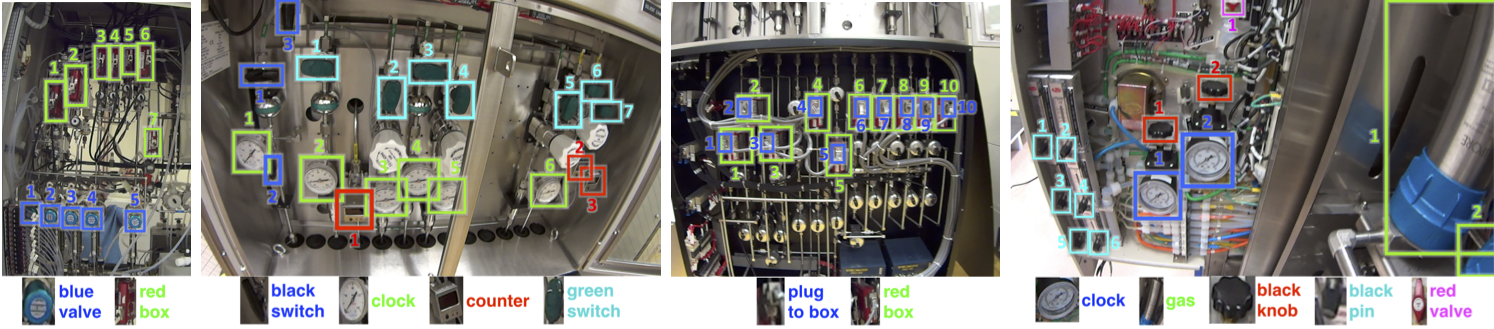

We present the first annotated dataset that allows performance to be quantified on an object disambiguation task as it frequently occurs in augmented reality settings and in assistance for maintenance work. The dataset captures 4 machines composed of 13 components. Each machine is built from a subset of these potentially repeating components, which occur in different spatial arrangements. We provide 14 videos with different viewing scenarios. For each video we provide human annotations on every 60th frame (at 30 fps), for a total of 249 annotated frames and 6244 object annotations that specify the type as well as a unique identity. The Object Disambiguation DataSet (ObDiDas) from the paper is available here [96.6GB].
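To put the dataset figures above in perspective, the following minimal sketch derives a few summary numbers from them. Only the five constants come from the description; the function name `dataset_summary` and the dictionary keys are illustrative assumptions, and the averages are simple ratios, not statistics reported in the paper.

```python
# Figures quoted in the dataset description.
NUM_VIDEOS = 14
ANNOTATED_FRAMES = 249
OBJECT_ANNOTATIONS = 6244
ANNOTATION_STRIDE_FRAMES = 60  # one annotated frame every 60 frames
FRAME_RATE_FPS = 30


def dataset_summary():
    """Return derived ratios (hypothetical helper, not part of ObDiDas)."""
    return {
        # Time between two consecutive annotated frames, in seconds.
        "seconds_between_annotated_frames":
            ANNOTATION_STRIDE_FRAMES / FRAME_RATE_FPS,
        # Average number of annotated frames per video.
        "mean_annotated_frames_per_video":
            ANNOTATED_FRAMES / NUM_VIDEOS,
        # Average number of labelled objects per annotated frame.
        "mean_object_annotations_per_annotated_frame":
            OBJECT_ANNOTATIONS / ANNOTATED_FRAMES,
    }


if __name__ == "__main__":
    for name, value in dataset_summary().items():
        print(f"{name}: {value:.2f}")
```

Under these figures, annotated frames are 2 seconds apart, and each annotated frame carries roughly 25 object annotations on average, which reflects how densely the repeated machine parts appear in a single view.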

Example images from the dataset. In each image, different colors encode different classes of machine parts, and each instance of a machine part is labelled with an identity that is unique within the machine.

Supplementary Video

References

Wei-Chen Chiu, Gregory S. Johnson, Daniel Mcculley, Oliver Grau and Mario Fritz, "Object Disambiguation for Augmented Reality Applications," in 25th British Machine Vision Conference (BMVC), Nottingham, UK, Sept 01-05, 2014.

@inproceedings{walon2014bmvc,

Author = {Chiu, W.C. and Johnson, G. and Mcculley, D. and Grau, O. and Fritz, M.},

Title = {Object Disambiguation for Augmented Reality Applications},

Booktitle = {Proceedings of the British Machine Vision Conference (BMVC)},

Year = {2014},

Location = {Nottingham, UK}

}