Gaze Embeddings for Zero-Shot Image Classification

Nour Karessli, Zeynep Akata, Bernt Schiele, and Andreas Bulling

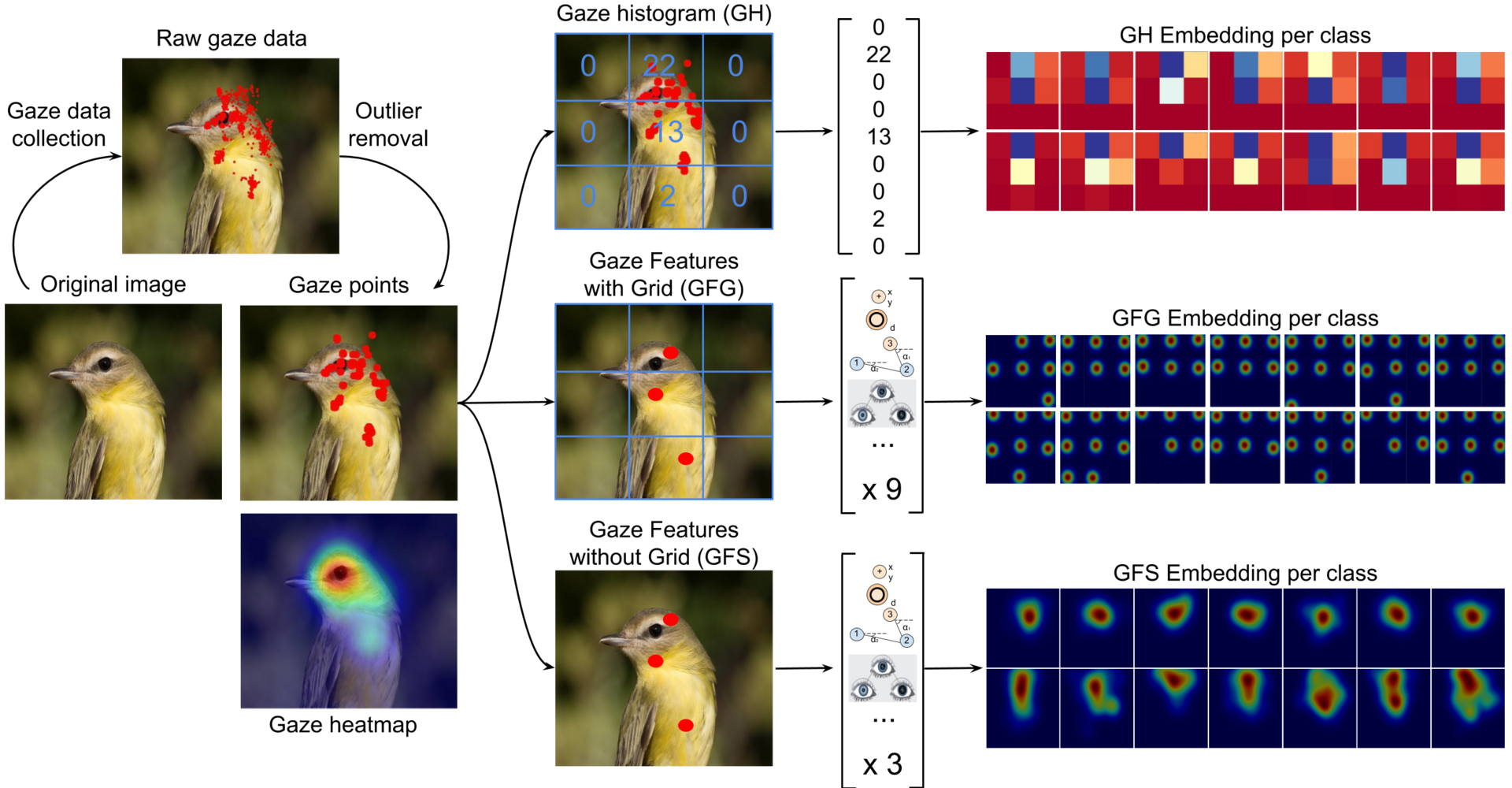

Zero-shot image classification using auxiliary information, such as attributes describing discriminative object properties, requires time-consuming annotation by domain experts. We instead propose a method that relies on human gaze as auxiliary information, exploiting the fact that even non-expert users have a natural ability to judge class membership. We present a data collection paradigm that involves a discrimination task to increase the information content obtained from gaze data. Our method extracts discriminative descriptors from the data and learns a compatibility function between image and gaze using three novel gaze embeddings: Gaze Histograms (GH), Gaze Features with Grid (GFG), and Gaze Features with Sequence (GFS). We introduce two new gaze-annotated datasets for fine-grained image classification and show that human gaze data is indeed class-discriminative, provides a competitive alternative to expert-annotated attributes, and outperforms other baselines for zero-shot image classification.
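To make the idea of a gaze embedding and a compatibility function concrete, here is a minimal sketch. It is not the released code. The abstract does not specify how Gaze Histograms are computed or what form the compatibility function takes, so this example assumes a histogram of normalized gaze points over a spatial grid and a bilinear score between an image feature and a per-class gaze embedding. The names `gaze_histogram`, `compatibility`, `predict`, and the matrix `W` are hypothetical.

```python
def gaze_histogram(points, rows=3, cols=3):
    """Assumed GH-style embedding: fraction of gaze points per grid cell.

    `points` holds (x, y) gaze locations normalized to [0, 1].
    """
    hist = [0.0] * (rows * cols)
    for x, y in points:
        # Clamp so that a coordinate of exactly 1.0 lands in the last cell.
        col = min(max(int(x * cols), 0), cols - 1)
        row = min(max(int(y * rows), 0), rows - 1)
        hist[row * cols + col] += 1.0
    total = sum(hist)
    return [h / total for h in hist] if total else hist


def compatibility(image_feat, W, class_emb):
    """Assumed bilinear score: image_feat^T W class_emb."""
    return sum(
        image_feat[i] * W[i][j] * class_emb[j]
        for i in range(len(image_feat))
        for j in range(len(class_emb))
    )


def predict(image_feat, W, class_embs):
    """Return the class whose gaze embedding scores highest for the image."""
    return max(class_embs, key=lambda c: compatibility(image_feat, W, class_embs[c]))
```

In a zero-shot setting, `W` would be learned on the training classes and `class_embs` would hold gaze embeddings of the unseen classes only, so prediction requires no labeled images of those classes.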

Paper, Code and Data

- If you use our code, please cite:

@inproceedings{karessliCVPR17,
  title = {Gaze Embeddings for Zero-Shot Image Classification},
  booktitle = {IEEE Computer Vision and Pattern Recognition (CVPR)},
  year = {2017},
  author = {Nour Karessli and Zeynep Akata and Bernt Schiele and Andreas Bulling}
}