Evaluation of Output Embeddings for Fine-Grained Image Classification

Zeynep Akata, Scott Reed, Daniel Walter,

Honglak Lee and Bernt Schiele

Abstract

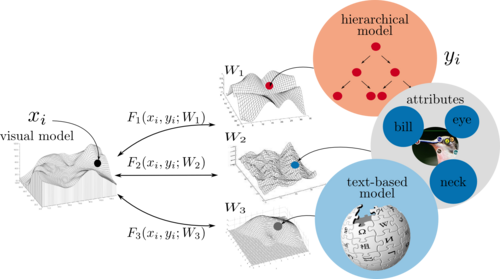

Image classification has advanced significantly in recent years with the availability of large-scale image sets. However, fine-grained classification remains a major challenge due to the cost of annotating a large number of fine-grained categories. In this work, we show that compelling classification performance can be achieved on such categories even without labeled training data. Given image and class embeddings, we learn a compatibility function such that matching embeddings are assigned a higher score than mismatching ones; zero-shot classification of an image proceeds by finding the label yielding the highest joint compatibility score. We use state-of-the-art image features and focus on different supervised attributes and unsupervised output embeddings either derived from hierarchies or learned from unlabeled text corpora. We establish a substantially improved state of the art with continuous attributes on the Animals with Attributes and Caltech-UCSD Birds datasets. Most encouragingly, we demonstrate that purely unsupervised output embeddings (learned from Wikipedia and improved with fine-grained text) achieve compelling results, even outperforming the supervised state of the art. Moreover, by systematically combining different output embeddings, we further improve results.
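The prediction rule described in the abstract (score every candidate label against the image and pick the highest-scoring one) can be sketched as follows. This is a minimal illustration, not the released SJE code: the bilinear form x^T W y is an assumption made here for concreteness, and the names `compatibility`, `predict`, `W`, and the toy numbers are all hypothetical.

```python
from typing import Dict, Sequence

Vector = Sequence[float]
Matrix = Sequence[Sequence[float]]


def compatibility(x: Vector, W: Matrix, y: Vector) -> float:
    """Score an (image embedding, class embedding) pair.

    Assumption: a bilinear form x^T W y. The abstract only states that
    a compatibility function is learned, not its exact shape.
    """
    return sum(
        x[i] * W[i][j] * y[j]
        for i in range(len(x))
        for j in range(len(y))
    )


def predict(x: Vector, W: Matrix, class_embeddings: Dict[str, Vector]) -> str:
    """Return the label whose embedding has the highest compatibility with x."""
    return max(
        class_embeddings,
        key=lambda label: compatibility(x, W, class_embeddings[label]),
    )


# Toy, made-up values: a 2-d image embedding and 3-d class embeddings.
# In a zero-shot setting these classes would have no labeled training images;
# only their output embeddings (attributes, text, hierarchy) are available.
W = [
    [1.0, 0.0, -1.0],
    [0.0, 1.0, 0.5],
]
unseen_classes = {
    "zebra": [1.0, 0.0, 0.0],
    "whale": [0.0, 1.0, 0.0],
}
image_feature = [0.2, 0.9]

print(predict(image_feature, W, unseen_classes))
```

Because the class embedding is the only class-specific input to the score, a new class can be added at test time by supplying its embedding, without retraining `W`.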

Code and Data for Structured Joint Embeddings for Fine-Grained Zero-Shot Learning

- Download the Structured Joint Embedding (SJE) code, the data, and the GoogLeNet features.

- If you use our code, please cite:

@inproceedings {ARWLS15,

title = {Evaluation of Output Embeddings for Fine-Grained Image Classification},

booktitle = {IEEE Computer Vision and Pattern Recognition},

year = {2015},

author = {Zeynep Akata and Scott Reed and Daniel Walter and Honglak Lee and Bernt Schiele}

}

References

[1] Evaluation of Output Embeddings for Fine-Grained Image Classification, Z. Akata, S. Reed, D. Walter, H. Lee and B. Schiele, CVPR 2015